What’s This All About

This article started life with the title “Five Reasons I Hate Python”.

What started as a rant morphed as I spent months working with a significant Python programme. I still believe some of what I originally thought, but it is now balanced with a modicum of respect for the language.

The “Hate” stemmed from where I came from; 5+ years of working with R, and - prior to that - decades of working with C++ and then C#.

Fundamentally, this comes down to an intense dislike for loose and dynamic typing (really, no typing).

A minor secondary “grump” is its use of white-space to denote nesting (instead of {...} as used by C++, C#, Java, Ruby, etc.).

But this does have a real impact! We’ll return to both of these later.

As a seasoned Software Engineer, Data Engineer, and Data Scientist, I have been responsible for crafting large production code-bases that have been deployed globally and have stayed the course of time for decades.

I write this now as an exposé on the Python language, looking at why you should use it, why (when) you shouldn’t, and some things you should know if you’re going to use it.

Python - An Introduction

Python has shot up in popularity over recent years.

When creating the web-site was “the thing to do”, Python was a significant player (Zope, Pylons, Django), alongside Ruby on Rails.

Then, Data Science happened.

The Big Boys used to rule that with SAS, SPSS and MATLAB. But the rise of Open Source shifted that, with R and Octave in particular.

Python programmers wanted a piece of that action, and started to re-implement some of R’s most successful features:

- R’s built-in data.frame inspired Pandas

- R’s ggplot2 inspired Python’s plotnine, ggplot, and to a lesser extent, seaborn.

- scikit-learn instead of the many R stats libraries

- Jupyter notebooks instead of RMarkdown / R Notebooks

Python is now a single language whose libraries allow you to:

- Configure your cloud infrastructure with Ansible

- Scrape websites with BeautifulSoup

- Get other data from AWS S3 or Google Cloud Storage with smart_open

- Database ORM access with SQLAlchemy

- Graph analytics with NetworkX

- Language Analysis with nltk

- Data Science modelling with scikit-learn

- Build your web-site in Django

- Data visualisation with seaborn

- Build a Minecraft clone with pyglet

- Generate your API documentation using Sphinx

- Generate your UML class diagram with pyreverse from pylint

That’s an amazing breadth of functionality.

Python’s Success

I think four things have been fundamental to Python’s growth and success.

In addition to these (or even because of these) Python is now:

- a popular educational language both in schools and universities - people are now coming into the work-place already familiar with Python

- supported / used heavily by Google, Microsoft and AWS

Arguably, Python is now the de-facto scripting language, displacing both Perl and Ruby, and is now even often a contender over statically-typed languages such as Java, C# and C++ for many use-cases.

Guido van Rossum & PEPs

Guido van Rossum is Python’s creator and was for many years its “Benevolent Dictator For Life” (BDFL). Python’s evolution process is defined via PEPs - “Python Enhancement Proposals”.

Both of these have led to Python being a rapidly evolving language, not slowed down by the consensus required by ISO standards and committees (as C++ is).

pip

pip - the Python Package Installer.

(or maybe the “Python Installer of Packages”? - just a thought 😉.)

This is now the de-facto way of deploying Python libraries, and makes that long list of extremely powerful libraries available to you with a quick pip install my-favourite-library.

Virtual Environments

Virtual Environments - now (Python ≥ 3.3) through venv, and formerly through virtualenv.

“Virtual Environments” are Python’s way of saving you from what C & C++ developers would call “DLL-hell”, the problem of having multiple, incompatible pre-requisites, and of code failing when it gets promoted to production due to differences in dev / test / production environments.

Continuum Analytics

Continuum Analytics, now simply “Anaconda”, developed the “Anaconda” platform, which was - and still is - a very popular platform for distributing Python and other Data Science tools. This makes installing a rich Python environment on Microsoft Windows much easier, opening up Python more easily to the Windows community, and even used by Microsoft to bring Python to their Machine Learning Services for SQL Server.

Python’s Weaknesses

I’m going to dig into four specific areas of Python that I find troublesome and annoying. These are based on decades of writing and reading code, of having it execute, and of having to debug issues when it doesn’t produce the expected results.

Typing System

At its core, Python is a dynamically typed language.

That means you can pass any variable, any literal, to any function, method or operator, and then they’ll “try and do their stuff” and may blow up if the “type” of the object passed wasn’t appropriate.

Another phrase that goes with “dynamically typed” is “duck-typed” - if it walks like a duck and talks like a duck then it’s a duck. The flip side: if you’re expecting a duck, treat it like a duck, but you may never really know if it is a duck.

What that means is that you’ll likely only get to see such type-violation problems at run-time. I’m old-school: I like compilers to catch bugs for me before the code gets deployed to production or sold to customers.

Additionally, objects in Python started out as dictionaries with some additional garnish. Any code could therefore add any attributes to any object it had access to - the “Christmas-tree” phenomenon, where code can attach any attributes and objects to the “Christmas-tree” object.

This even applies to object methods, which can be overridden and replaced.

Now, this has been a benefit to me: one of my early projects injected shims into pandas.read_csv, pandas.read_excel and pandas.DataFrame.to_csv as a way to obtain a dependency graph between data assets. This is trivially easy in Python because of its “permissiveness”.

There are cool tricks that can be pulled off because of Python’s type-permissiveness. But it comes at the cost of casting “doubt” on what the code in your project might be getting up to, and makes it easy to transgress design decisions accidentally.
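A minimal sketch of both effects, using only the standard library. The Asset class and the traced_dumps shim are hypothetical names for illustration, and the shim wraps json.dumps rather than a pandas function, but the technique is the same.

```python
import functools
import json


class Asset:
    """A hypothetical, ordinary class with one declared attribute."""

    def __init__(self, name: str) -> None:
        self.name = name


asset = Asset("sales.csv")
# "Christmas-tree" behaviour: any code can hang a new attribute on the object.
asset.owner = "finance"

calls = []  # records every payload that passes through the shim
original_dumps = json.dumps


@functools.wraps(original_dumps)
def traced_dumps(obj, *args, **kwargs):
    """Shim that logs the call, then defers to the real json.dumps."""
    calls.append(obj)
    return original_dumps(obj, *args, **kwargs)


json.dumps = traced_dumps  # replace a library function at run-time
try:
    encoded = json.dumps({"asset": asset.name, "owner": asset.owner})
finally:
    json.dumps = original_dumps  # always restore the original

print(encoded)
print(len(calls))
```

Nothing in the language stops either assignment, which is exactly the convenience and the risk described above.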

Mitigants

Type-hints

Type-hints were added in Python 3.5 through PEP 484.

Running mypy (alongside a linter such as pylint) as part of the development process will flag type violations using static code analysis.

Additionally the type hints can supplement docstrings (see later) to augment generated documentation.

Dropbox has written about how they added type-checking to their 4 million lines of Python code.
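As a small illustration of what type-hints look like in practice, here is a sketch with two hypothetical functions, parse_age and is_child. The annotations are ignored at run-time; it is the static checker that reads them.

```python
from typing import Optional


def parse_age(text: str) -> Optional[int]:
    """Return the age as an int, or None if the text is not a whole number."""
    try:
        return int(text)
    except ValueError:
        return None


def is_child(age: int) -> bool:
    """True for ages from 0 up to, but not including, 18."""
    return 0 <= age < 18


age = parse_age(" 10 ")
# A checker such as mypy should object to is_child(age) at this point,
# because age may be None; narrowing the type first satisfies it.
if age is not None:
    print(is_child(age))
```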

Slots

Python 2.2 added the option of using slots instead of the default dictionary for storing object attributes.

At the time, these provided a significant space-saving (less so since Python 3.3 introduced key-sharing dictionaries in PEP 412) but brought the useful side effect of disallowing the assignment of arbitrary attributes to objects. (You can actually re-enable this by adding __dict__ as one of the entries in __slots__ if needed!)
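The side effect is easy to see in a short sketch. Point is a hypothetical class used only for illustration.

```python
class Point:
    """A hypothetical class whose attributes are fixed by __slots__."""

    __slots__ = ("x", "y")

    def __init__(self, x: float, y: float) -> None:
        self.x = x
        self.y = y


point = Point(1.0, 2.0)
try:
    point.z = 3.0  # not listed in __slots__, so the assignment is rejected
except AttributeError as error:
    print("Rejected:", error)

# Without a per-instance dictionary there is nowhere to hang extra attributes.
print(hasattr(point, "__dict__"))
```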

Performance

The default Python interpreter (CPython) has three characteristics that reduce its performance.

Some of these are even a requirement of the Python specification.

Everything’s an Object

Even integers are objects.

Human-beings can get surprised that integers have an upper-limit in many programming languages.

Many programmers will get surprised that Python doesn’t.

A typical 32-bit int in modern C, C++ & Java has a range of \(-2^{31}\) (-2147483648) to \(2^{31}-1\) (+2147483647) and requires 4 bytes of storage.

import sys

for x in (1, 127, 200):
    x = 2 ** x
    print('Value %i' % x)
    print('Size: %i' % sys.getsizeof(x))

## Value 2

## Size: 28

## Value 170141183460469231731687303715884105728

## Size: 44

## Value 1606938044258990275541962092341162602522202993782792835301376

## Size: 52

This makes Python “a language for humans” rather than a “language for programmers”, which can cause programmers a degree of shock. It is also worth highlighting that division is more aligned with human expectation than with programmers’ expectation.

The humble integer is an object with a rich set of methods.

x = 2

print(type(x))

print(dir(x))

## <class 'int'>

## ['__abs__', '__add__', '__and__', '__bool__', '__ceil__', '__class__', '__delattr__', '__dir__', '__divmod__', '__doc__', '__eq__', '__float__', '__floor__', '__floordiv__', '__format__', '__ge__', '__getattribute__', '__getnewargs__', '__gt__', '__hash__', '__index__', '__init__', '__init_subclass__', '__int__', '__invert__', '__le__', '__lshift__', '__lt__', '__mod__', '__mul__', '__ne__', '__neg__', '__new__', '__or__', '__pos__', '__pow__', '__radd__', '__rand__', '__rdivmod__', '__reduce__', '__reduce_ex__', '__repr__', '__rfloordiv__', '__rlshift__', '__rmod__', '__rmul__', '__ror__', '__round__', '__rpow__', '__rrshift__', '__rshift__', '__rsub__', '__rtruediv__', '__rxor__', '__setattr__', '__sizeof__', '__str__', '__sub__', '__subclasshook__', '__truediv__', '__trunc__', '__xor__', 'as_integer_ratio', 'bit_length', 'conjugate', 'denominator', 'from_bytes', 'imag', 'numerator', 'real', 'to_bytes']

And some interesting operator semantics that, again, are “human” rather than “programmer” centric.

print(3 / 2)

print(3 // 2)

## 1.5

## 1

None of this is “bad”, but it can be a big surprise to programmers coming from compiled languages or Java.

However, it has consequences for memory overheads, and increases the cost of function and method invocations.

No Efficient Typed Arrays / Vectors

Almost a natural consequence of the above: if you want an array of integers, it will cost you loads.

import sys

from pympler import asizeof

x = list(range(0,1000))

print('Size of list: %i' % sys.getsizeof(x))

print('Size of list and values: %i' % asizeof.asizeof(x))

## Size of list: 8056

## Size of list and values: 40048

So, Python will natively use about 40 kB for a list of 1,000 integers.

In comparison, a vector of 1000 integers in R will take:

x <- 1:1000

object.size(x)

class(x[100])

## 4048 bytes

## [1] "integer"

roughly \(\frac{1}{10}\) of that.

And R will promote the whole array to doubles if the space becomes insufficient.

x[100] = 2 ** 300

object.size(x)

class(x[2])

is.integer(x[2])

is.double(x[2])

## 8048 bytes

## [1] "numeric"

## [1] FALSE

## [1] TRUE

And will promote the whole thing to character if you assign a string to a single element.

x[100] = "Hello"

object.size(x)

class(x[2])

## 64048 bytes

## [1] "character"

(R offers a list type if you want an array of non-homogeneous types.)

So, natively Python is a dreadful world in which to do large-scale data.

But what about Pandas and NumPy you say? Let’s take a look at that…
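Before turning to those libraries, it is worth noting for completeness that the standard library does ship a compact typed array in its array module, although it offers none of the vectorised operations that R users would expect. A rough sketch of the size difference follows; the totals depend on platform and Python version, so no figures are quoted.

```python
import sys
from array import array

boxed = list(range(1000))
typed = array("i", range(1000))  # "i" is a signed C int

# The list only holds pointers, so add the size of each int object as well.
# This is a rough total, since CPython shares small int objects.
boxed_total = sys.getsizeof(boxed) + sum(sys.getsizeof(v) for v in boxed)
typed_total = sys.getsizeof(typed)

print("list plus int objects:", boxed_total)
print("typed array:", typed_total)

try:
    typed[100] = "Hello"  # unlike R, there is no silent promotion
except TypeError as error:
    print("Rejected:", error)
```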

Boxing, Unboxing, and Type Obfuscation

Pandas claims to be “a fast, powerful, flexible and easy to use open source data analysis and manipulation tool, built on top of the Python programming language.”

I have to say that that’s a lie.

It’s built underneath the Python programming language, in C. From https://pandas.pydata.org/about/: “Highly optimized for performance, with critical code paths written in Cython or C”.

The same is true of NumPy.

Both of these libraries use a more efficient storage representation that would be more familiar to C programmers (or R programmers). There are five down sides of this:

- If you actually want to gain access to one of these variables, it will need conversion between the Python native format and the optimised C format.

- In consequence, it’s pointless to use Python code to read and write these objects; Pandas implements its own read_csv in order that data can be read directly into the optimised storage format.

- Ditto for writing.

- Pandas does not expose any “character array”; such arrays always appear to have the type “object”, as will any non-homogeneous array.

- You can’t have NULLs in integer arrays. Pandas chose to use NaN (Not A Number) for its NULL, and this is only available in floating-point representations. Therefore a NULLable integer column will, by default, actually be stored as floating-point.

As a result of these last two, you can’t load a CSV in Pandas and easily determine the true types of each column.

R makes this easy, and has one of the best CSV reader functions in the world through the readr library.

Mitigants

Firstly, beware of “premature optimisation”. (I can say this because I’ve suffered from this condition for decades!)

Libraries written in C / C++ / Fortran

You can get memory efficient arrays and matrices through numpy. NumPy is a Python library wrapper around optimised memory structures and compiled C code that can make use of SIMD assembler instructions, typically via BLAS.

PyPy

To optimise numerically intensive Python code, including the cost of its function calls, try PyPy. Rather than a direct interpreter written in C (CPython), PyPy uses a tracing JIT (just-in-time) compiler, produced by its RPython toolchain, to improve performance.

Generators and Co-routines

Generators and Co-routines allow for the generation and consumption of data (messages) from stateful functions and methods with a much lower overhead than full function calls. Additionally, their structure makes it very convenient to establish pre-setup and post-tear-down code with guaranteed execution. This is otherwise hard to establish in garbage-collected languages, where RAII (Resource Acquisition Is Initialization) idioms are ineffective due to the lack of deterministic destructors.
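The setup and tear-down idea can be sketched with contextlib.contextmanager, which turns a generator into a context manager. The opened_resource function and the events list are hypothetical; a real version would open a file or database connection instead of appending strings.

```python
from contextlib import contextmanager

events = []


@contextmanager
def opened_resource(name: str):
    """Hypothetical resource: setup before the yield, tear-down after it."""
    events.append(f"open {name}")
    try:
        yield name.upper()
    finally:
        # Runs even if the body of the with-block raises.
        events.append(f"close {name}")


try:
    with opened_resource("db") as handle:
        events.append(f"use {handle}")
        raise RuntimeError("query failed")
except RuntimeError:
    events.append("error handled")

print(events)
# ['open db', 'use DB', 'close db', 'error handled']
```

The tear-down runs before the exception reaches the caller, which is the guarantee that RAII gives in C++.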

Cython

Cython allows for a Python-like language to be translated to C and compiled. Intended to simplify the creation of C extensions for Python, it can be used for compiling executables that leverage a mixture of Python and C variable types.

I’ve not yet experimented with Cython. It sounds very promising. The reason why I’ve not had a go yet boils down to its complexity. That PyPy is a direct replacement for the CPython interpreter makes it a much more attractive proposition.

Jupyter Notebooks

I’ve been using RMarkdown “notebooks” (note, not RNotebooks) as my go-to exploratory structure for over 5 years.

You can combine R, Python, BASH, SQL, Fortran, Julia, C++, Scala, Octave, Haskell, Perl; all in a single file.

You can commit the files to git easily and use git diff to see changes easily.

You can “knit” these documents into HTML, PDF, or full web sites. Even into Microsoft Word and PowerPoint documents.

So, why do I cite Python’s Jupyter Notebooks as a weakness?

Let me tell you:

- You only get to use one language in each Jupyter Notebook

- Jupyter writes its output into its source file, leading to large “binary” objects (e.g. images) being included in “source” files, which then balloon in size. This is troublesome for any source control system, and means that differencing tools have to deal with masses of “inconsequential” changes, rather than focusing on the source changes. It is one of Jupyter’s poorest design choices in my opinion.

- Jupyter wasn’t intended to be used to produce “output” documents or for producing publication ready reports, or even for reproducible research.

- On the reproducible research front, notebooks (and I include RMarkdown here) encourage developers and scientists to go backwards and forwards and run code-chunks out of sequence as they develop and refine their work. The saving grace in the R world is that, at the end of all of that, the document will usually be knitted to HTML or PDF in a clean environment. Jupyter’s lack of a “final output” target makes it more likely that the final “clean run” may not occur.

- Jupyter exposes you to the fact that it runs as its own web-server that you then connect to with a web browser. For this reason alone, I’d rather develop my Python notebooks in VS Code, which uses IPython - the Python language kernel used by Jupyter - to power its own Python Notebook environment.

Mitigants?

I use RStudio and RMarkdown documents, even for my Python notebooks!

Alternatively, pre-commit-hooks have been developed for git to strip the “output” parts of Jupyter notebooks prior to being committed, to at least overcome that issue.

An additional down side is that these hooks have to be set up for every single user that checks out the repository. This is perhaps another reason why Jupyter is predominantly used as a server side-technology, as then only a single server needs this configuration and it can be hidden from end-users.
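To show what such a hook has to do, here is a minimal sketch that strips outputs from a notebook held as JSON. It assumes the nbformat 4 layout (a top-level "cells" list whose code cells carry "outputs" and "execution_count"); strip_outputs and the hand-written example notebook are hypothetical, and this is not a replacement for the established hooks.

```python
import json


def strip_outputs(notebook: dict) -> dict:
    """Return a copy of a notebook (nbformat 4 layout) without cell outputs."""
    cleaned = json.loads(json.dumps(notebook))  # cheap deep copy of JSON data
    for cell in cleaned.get("cells", []):
        if cell.get("cell_type") == "code":
            cell["outputs"] = []
            cell["execution_count"] = None
    return cleaned


# A tiny hand-written notebook, standing in for a real .ipynb file.
example = {
    "nbformat": 4,
    "nbformat_minor": 5,
    "metadata": {},
    "cells": [
        {"cell_type": "markdown", "metadata": {}, "source": ["# Title"]},
        {
            "cell_type": "code",
            "metadata": {},
            "execution_count": 7,
            "source": ["print(1 + 1)"],
            "outputs": [
                {"output_type": "stream", "name": "stdout", "text": ["2\n"]}
            ],
        },
    ],
}

stripped = strip_outputs(example)
print(json.dumps(stripped["cells"][1], indent=1))
```

Only the source survives, so a diff of the stripped file shows code changes and nothing else.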

White-space

So, gone are the curly braces - {...} - and all the heated arguments about where they should be placed.

These were the delimiter of choice in many languages for showing blocks of code, such as which lines should be considered the body of an if statement or for loop.

Python took a different path, by using indentation.

So, instead of curly brackets in C:

#include <stdio.h>

int main() {
    printf("Hello World\n"); // say hello world
}

we see:

def main():
    print("Hello World") # say hello world

main()

Code Editing & Navigation

The problem arises when you have deeply nested code (arguably this is an indication of poor design and the need for refactoring, but it will occur frequently in the real world, especially under time pressure).

In deeply nested code, you can’t easily see “end-points” of the block.

With brace-delimited languages, many code editors let you hit Ctrl-[ when the cursor is next to a bracket to jump to its matching one - this makes for very rapid “code cruising” when you’re either reviewing or getting to know code.

Templating

Additionally, the dependency on white-space makes it difficult to engineer a usable templating language based on Python.

The de-facto templating engine in the Python world is jinja2.

However, when you write code blocks in jinja, you don’t really get to write Python code; you have to write code that doesn’t look like Python, that uses a bunch of jinja specific idioms.

So, to use jinja, you have to learn another - very constrained - language. Jinja doesn’t even come with an easy way to add a new key to a Python dictionary.

Contrast this with Ruby’s ERB, R’s brew package, or even Microsoft’s T4 Templates, all of which allow you to use the full expression of their “host” language (or environment - T4 Templates can use any .Net language) inside of them.

Mitigants

Suck it up, or use a different language. You don’t necessarily need to convert your whole Python program, but then you find yourself in a polyglot programming experience, and shell scripts and makefiles will likely need to come into play (or at least one of these).

You can refactor your code into smaller functions, to make them easier to read, but each one will introduce additional function call overheads, which are high in CPython.

Python’s Pleasant Surprises

Sorry, I can only find one:

BETWEEN for Humans

There is one piece of Python syntax that surprised and delighted me:

age = 10

if 0 <= age < 18:
    print("This is a child")

This does exactly what the human reader expects. I love the brevity of it, I love the DRYness, I love that it can be left/right inclusive/exclusive as the programmer requires it (as opposed to SQL’s BETWEEN).
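A short sketch of how the inclusive and exclusive ends can be mixed to build half-open bands with no gaps or overlaps. The age_band function and its thresholds are hypothetical.

```python
def age_band(age: int) -> str:
    """Classify an age using left-inclusive, right-exclusive ranges."""
    if age < 0:
        raise ValueError("age cannot be negative")
    if 0 <= age < 18:
        return "child"
    if 18 <= age < 65:
        return "adult"
    return "senior"


print([age_band(a) for a in (0, 17, 18, 64, 65)])
# ['child', 'child', 'adult', 'adult', 'senior']

# The chain is shorthand for (0 <= age) and (age < 18), with age evaluated once.
age = 10
print((0 <= age < 18) == ((0 <= age) and (age < 18)))
# True
```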

When to use Python

- When your background is “human being” rather than “computer scientist” / “programmer”

- When your program is small

- When you are using Python as “glue”, i.e. the heavy-lifting is being done by packages written in C, C++, etc.

- When you want something more portable than a BASH script

- When you’re betting on a single toolkit for an unpredictable set of problems that you may need to solve. Learning multiple languages takes time; if you need to bet on a single horse, Python is a good place to lay your wager.

In reality, if you’re starting something that you think could evolve into a large project, have an experienced polyglot programmer take a look at the project and recommend the set of languages and where the language boundaries should lie.

This should include documentation languages such as markdown, reStructuredText, dot, PlantUML; build systems such as GNU make or Rake; and SQL build systems such as dbt.

When NOT to use Python

- Very large or complex programs that you want to be bug-free

- When performance matters

- When you want to produce high-quality and / or multi-format static reports

- When your code heavily uses regexes, such as parsers and document translators / modifiers. (Perl will give you a much more concise program.)

And this is personal opinion. I’ve already mentioned Dropbox has around 4 million lines of Python code, so it’s not impossible.

What to do When you use Python

Document Your Code

Make your code legible, and produce great documentation:

- Use type-hints
  a. Use mypy to run static code analysis over the top of the type-hints and find bugs for you.

- Use docstrings
  a. Use Google-style docstrings as they are significantly easier on the eye when you’re reading them in the actual code (rather than in generated documentation).
  b. Don’t put your type information in docstrings; use the type-hints as per the first point.

- Use doctests to both document and test your code.

- Use type-hints for object attributes.

- Use attribute docstrings to document significant object and class attributes. Better still (but less well supported) are “doc comments” using #: that can be placed before attributes or as in-line (end-of-line) comments.

- Use sphinx to generate great documentation
  a. Use napoleon to process Google-style docstrings.
  b. Use autoapi to automatically generate documentation for all of your Python sources.
  c. Install recommonmark so that you can also write “simple” documentation in Markdown, which is easier on the eye than Sphinx’s default reStructuredText.

- pyreverse from pylint can be used to reverse engineer UML diagrams from your Python code.
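Several of these recommendations fit into one small sketch: type-hints, a Google-style docstring, an embedded doctest, a “doc comment” and an attribute docstring. The Account class is hypothetical and exists only to show the layout.

```python
import doctest


class Account:
    """A hypothetical bank account, used to illustrate the conventions above."""

    #: Currency code applied to every account (a "doc comment").
    currency: str = "AUD"

    def __init__(self, balance: float = 0.0) -> None:
        self.balance: float = balance
        """Current balance (an attribute docstring)."""

    def deposit(self, amount: float) -> float:
        """Add money to the account.

        Args:
            amount: The sum to add; must be positive.

        Returns:
            The new balance.

        Raises:
            ValueError: If amount is not positive.

        Example:
            >>> Account(10.0).deposit(5.0)
            15.0
        """
        if amount <= 0:
            raise ValueError("amount must be positive")
        self.balance += amount
        return self.balance


if __name__ == "__main__":
    # Runs every example embedded in the docstrings of this module.
    print(doctest.testmod())
```

Note that the types appear only in the signature, so the docstring can concentrate on meaning rather than repeating them.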

Code versus Config

In these days of the cloud and “software defined infrastructure”, configuration has become king, being preferred over pure code, and “domain specific languages” (DSLs) have become common-place.

But Python - in large part, due to its white-space nature - is a poor language for DSLs.

However, JSON, or (better still) its legible counterpart YAML can be used to describe quite complex structures in a concise way.

These can then be included in Python packages (including zip files) and loaded using Python’s importlib.

Yes, you could always have just embedded a string in a normal Python package, but then you’d lose the syntax highlighting in your favourite editor, and wouldn’t be able to re-purpose the config information in additional use-cases. (E.g. in other languages such as BASH scripts.)
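A sketch of loading packaged configuration, on the assumption that “importlib” here means importlib.resources (the files() call used below needs Python 3.9 or later). JSON is used because its parser is in the standard library. The package name demo_settings_pkg and the file pipeline.json are hypothetical; the example builds a throw-away package in a temporary directory so that it can run on its own.

```python
import importlib
import importlib.resources
import json
import sys
import tempfile
from pathlib import Path


def load_packaged_config(package: str, resource: str) -> dict:
    """Read a JSON resource that ships inside an importable package."""
    location = importlib.resources.files(package).joinpath(resource)
    return json.loads(location.read_text(encoding="utf-8"))


with tempfile.TemporaryDirectory() as tmp:
    # Build a throw-away package containing one config file.
    package_dir = Path(tmp) / "demo_settings_pkg"
    package_dir.mkdir()
    (package_dir / "__init__.py").write_text("", encoding="utf-8")
    (package_dir / "pipeline.json").write_text(
        json.dumps({"source": "s3://example-bucket/raw", "retries": 3}),
        encoding="utf-8",
    )

    sys.path.insert(0, tmp)  # make the temporary package importable
    try:
        importlib.invalidate_caches()
        config = load_packaged_config("demo_settings_pkg", "pipeline.json")
    finally:
        sys.path.remove(tmp)

print(config["retries"])
```

Because the file is addressed by package name rather than by path, the same call should keep working once the package is installed somewhere else.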

Some Performance Suggestions

Use PyPy over CPython when you have lots of Python code “doing stuff” (rather than just calling library functions).

Use Generators and Coroutines

- Use generators (a special type of iterator) with with clauses (a.k.a. “context managers”) to abstract away “boiler plate” code for reading files, querying databases, etc.

- Use coroutines when building data-pipelines.

Both generators and coroutines have less overhead than function invocations, and allow for great use of setup and tear-down code.
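A minimal sketch of a two-stage data pipeline built from generator-based coroutines, where values are pushed in with send(). The coroutine decorator and the stage names keep_even and collect are hypothetical helpers written for this example.

```python
import functools


def coroutine(func):
    """Decorator that advances a generator-based coroutine to its first yield."""
    @functools.wraps(func)
    def start(*args, **kwargs):
        generator = func(*args, **kwargs)
        next(generator)
        return generator
    return start


@coroutine
def keep_even(target):
    """Pipeline stage: forward only even numbers to the next stage."""
    while True:
        value = yield
        if value % 2 == 0:
            target.send(value)


@coroutine
def collect(results):
    """Pipeline sink: append everything it receives to the results list."""
    try:
        while True:
            results.append((yield))
    finally:
        results.append("closed")  # tear-down, triggered by close()


received = []
sink = collect(received)
pipeline = keep_even(sink)
for number in range(6):
    pipeline.send(number)
pipeline.close()
sink.close()

print(received)
# [0, 2, 4, 'closed']
```

Each stage keeps its state between messages, and the finally clause gives the sink a guaranteed place for tear-down code.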

Use cProfile to profile your code and find out where it’s really spending its time. But also beware of trying to optimise any input / output code - you may be waiting for IO rather than consuming CPU, and you can’t simply optimise that.

Don’t use any form of multi-threading. If you think you need multi-threading, you probably need multi-processing. Work out how to split your work into totally independent chunks and use GNU Parallel to run a bunch of concurrent Python (or PyPy) processes.
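For the profiling advice, a minimal sketch of driving cProfile from inside a program and printing the most expensive calls. The slow_sum function is a hypothetical stand-in for real work, and the timings will differ on every run.

```python
import cProfile
import io
import pstats


def slow_sum(n: int) -> int:
    """Deliberately naive loop, standing in for real work."""
    total = 0
    for i in range(n):
        total += i * i
    return total


profiler = cProfile.Profile()
profiler.enable()
result = slow_sum(100_000)
profiler.disable()

# Collect the report in memory, sorted by cumulative time, top five entries.
buffer = io.StringIO()
stats = pstats.Stats(profiler, stream=buffer)
stats.sort_stats("cumulative").print_stats(5)
report = buffer.getvalue()
print(report)
```

For a whole script, running python -m cProfile followed by the script name gives the same kind of report without any code changes.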

IDE / Editor

I have only lightly tried out PyCharm, which is one of the most popular Python editors / IDEs. It simply didn’t come naturally to me. Caveat: I grew up with Delphi and Visual Studio.

I’ve spent the last 6 months heavily using Visual Studio Code (VS Code) and have been incredibly impressed by it, especially by the breadth of add-ins and syntax highlighting for Makefiles, dot files (GraphViz), YAML files, sed, awk and even Perl files (I have 2 lurking in my current project!).

It also has great integration with Python’s debugger, allowing for setting breakpoints (including conditional breakpoints), and debugging windows for the call-stack, local variables, and an expression watch window.

Seriously, Microsoft’s development environments (Visual Studio and VS Code) have become awesome since hiring Anders Hejlsberg, the programmer behind both Turbo Pascal and Delphi.

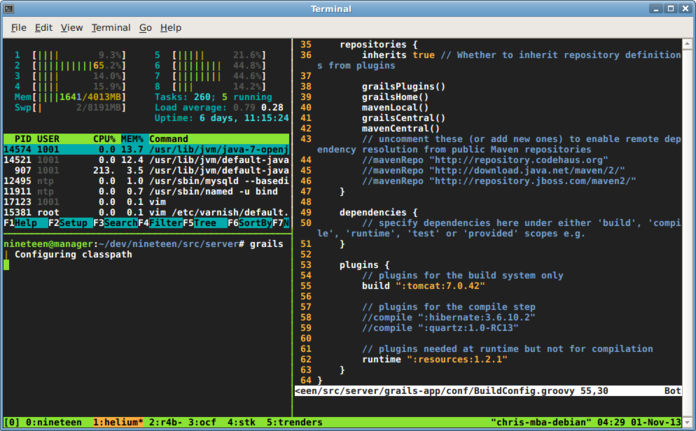

When living on the terminal (e.g. AWS EC2 instances) I’ll use a combination of vim and nano, in a multiple-pane tmux setup.

Windows Development

There are two main options for running Python on Windows.

Both distributions inherit a tension created by the Windows eco-system.

Just installing Python for Windows is unlikely to provide enough of an ecosystem for a professional developer. (But it is OK for your kids to learn programming.)

The tool-chains for building Python and its C-based extensions don’t sit nicely on Windows, having been designed as Open Source, by an Open Source community predominantly working on Linux. The plus to this is you can install the same Python on your Linux desktop or server, a Raspberry Pi, and your Android phone. The down side is that Windows (out of the box) will not be able to build / install many of the Python packages as it doesn’t (by default) come with or give you easy access to a C / C++ compiler.

In consequence, before you try and install a Python package with pip, you should look to see if your distribution’s package manager provides a pre-compiled binary for Windows, to save on a build process that may fail.

Anaconda has a package manager called conda; MinGW uses the pacman package manager ported from Arch Linux.

Note that Anaconda is also supported on macOS and Linux, and so can be used as a cross-system platform.

On Windows, I personally prefer to use MinGW, as I then have access to a host of GNU tools, such as make, sed, awk, perl, bash, etc.

Anaconda

Anaconda - originally the name of the package, now the name of the company who built it, formerly called Continuum Analytics - is one of the most popular and easy to use Python distributions.

And it’s not just for Python. You can install other Data Science tools, such as R.

MinGW

MinGW, or “Minimalist GNU for Windows”, is a set of GNU packages natively compiled for Windows. These run faster than their equivalent Cygwin packages, as Cygwin compiles these tools on top of a POSIX compatibility layer, which is a rather expensive shim.

In addition, there is then a host of GNU build tools that become available, that can be installed without admin permissions, and that will work in concert.

My personal environment currently has the following MinGW packages installed:

# To generate:

# pacman -Qqet > MinGW64.pkglist.txt

# To install in new environment:

# sed -e 's/#.*//' MinGW64.pkglist.txt | pacman -S --needed -

asciidoc

autoconf

autoconf2.13

autogen

automake-wrapper

base

bison

btyacc

cocom

dos2unix

flex

gcc-fortran

gdb

gettext-devel

git

git-extra

gperf

help2man

intltool

lemon

libcrypt-devel

libtool

libunrar-devel

make

man-db

mc

mingw-w64-x86_64-clang-tools-extra

mingw-w64-x86_64-cython

mingw-w64-x86_64-gcc-fortran

mingw-w64-x86_64-git

mingw-w64-x86_64-git-doc-html

mingw-w64-x86_64-git-doc-man

mingw-w64-x86_64-graphviz

mingw-w64-x86_64-perl-doc

mingw-w64-x86_64-python-colorama

mingw-w64-x86_64-python-cryptography

mingw-w64-x86_64-python-ipython

mingw-w64-x86_64-python-mypy_extensions

mingw-w64-x86_64-python-pip

mingw-w64-x86_64-python-pylint

mingw-w64-x86_64-python-sphinx

mingw-w64-x86_64-python-virtualenv

mingw-w64-x86_64-python-wheel

mingw-w64-x86_64-xxhash

nano

openbsd-netcat

p7zip

pactoys-git

parallel

patchutils

pkgconf

python-pip

quilt

rcs

reflex

rsync

ruby

scons

swig

texinfo

texinfo-tex

tmux

tree

ttyrec

unzip

xmlto

zsh

Finally - The Unix Philosophy

I would summarise the Unix philosophy as “write a program that does one thing well, then combine it with other programs.”

This is why shell scripts are so prevalent on Unix / Linux. They are the glue that combines. (Or - if more lifting power is needed - then Perl.)

While Python can be used to monolithically build a single program that does “everything”, don’t underestimate the reusability and developer productivity that comes from combining many smaller programs. Each one will be easier to develop and test, and initial use of the system can all be tested and delivered via the command line.

This also provides for an environment where we use “the right tool for the right job”, where elements can be leveraging Perl for parsing, Ruby for a DSL, etc..

The biggest issue with command line apps is parsing their arguments, and Python has a brilliant framework called argparse for helping with that.
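A minimal sketch of a small command line tool built on argparse. The tool name wordcount and its options are hypothetical; a real script would call main() with no arguments so that argparse reads sys.argv.

```python
import argparse


def build_parser() -> argparse.ArgumentParser:
    """Describe the command line of a hypothetical word-counting tool."""
    parser = argparse.ArgumentParser(
        prog="wordcount",
        description="Count the words in a piece of text.",
    )
    parser.add_argument("text", help="the text to analyse")
    parser.add_argument("-m", "--min-length", type=int, default=1,
                        help="ignore words shorter than this (default: 1)")
    parser.add_argument("-v", "--verbose", action="store_true",
                        help="print the matching words as well as the count")
    return parser


def main(argv=None) -> int:
    """Parse argv (or sys.argv when argv is None) and print the word count."""
    args = build_parser().parse_args(argv)
    words = [w for w in args.text.split() if len(w) >= args.min_length]
    if args.verbose:
        print(" ".join(words))
    print(len(words))
    return len(words)


if __name__ == "__main__":
    # An explicit argument list keeps the example self-contained.
    main(["do one thing well", "--min-length", "3", "--verbose"])
```

argparse also generates the --help text and the error messages for bad input, which is most of the tedium in writing small composable programs.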

Postscript

True to what I said much earlier, this document was written in RMarkdown using RStudio preview edition 1.4.11.

All Python and R code in this document was executed as this document was knitted to produce its output.

The libraries and environments were as follows:

sessionInfo()

## R version 4.1.0 (2021-05-18)

## Platform: x86_64-pc-linux-gnu (64-bit)

## Running under: Debian GNU/Linux 11 (bullseye)

##

## Matrix products: default

## BLAS: /usr/lib/x86_64-linux-gnu/atlas/libblas.so.3.10.3

## LAPACK: /usr/lib/x86_64-linux-gnu/atlas/liblapack.so.3.10.3

##

## locale:

## [1] LC_CTYPE=en_AU.UTF-8 LC_NUMERIC=C

## [3] LC_TIME=en_AU.UTF-8 LC_COLLATE=en_AU.UTF-8

## [5] LC_MONETARY=en_AU.UTF-8 LC_MESSAGES=en_AU.UTF-8

## [7] LC_PAPER=en_AU.UTF-8 LC_NAME=C

## [9] LC_ADDRESS=C LC_TELEPHONE=C

## [11] LC_MEASUREMENT=en_AU.UTF-8 LC_IDENTIFICATION=C

##

## attached base packages:

## [1] stats graphics grDevices utils datasets methods base

##

## other attached packages:

## [1] bit64_4.0.5 bit_4.0.4 reticulate_1.20 pacman_0.5.1

##

## loaded via a namespace (and not attached):

## [1] Rcpp_1.0.7 knitr_1.33 magrittr_2.0.1 rappdirs_0.3.3

## [5] lattice_0.20-44 R6_2.5.0 rlang_0.4.11 stringr_1.4.0

## [9] tools_4.1.0 grid_4.1.0 xfun_0.24 png_0.1-7

## [13] jquerylib_0.1.4 htmltools_0.5.1.1 yaml_2.2.1 digest_0.6.27

## [17] bookdown_0.22 Matrix_1.3-4 sass_0.4.0 evaluate_0.14

## [21] rmarkdown_2.9 blogdown_1.4 stringi_1.7.3 compiler_4.1.0

## [25] bslib_0.2.5.1 jsonlite_1.7.2

The above declares everything that was used to create this, making this information and the source *.Rmd file everything you need to exactly reproduce this document.